Overview

This document outlines the ways in which the Mach41 in-memory data platform can improve the performance, scalability, and resilience of the OAuth module in enterprise platforms.

Problem Statement

OAuth 2.0 has become a widely adopted standard for implementing secure token-based access control. However, managing OAuth tokens efficiently in high-traffic systems presents several challenges, including ensuring high availability, resilience, and performance.

In a typical OAuth implementation, token validation often requires calls to an external authorization server or database. These repeated calls can introduce latency, increase the load on backend systems, and lead to potential bottlenecks or single points of failure. Additionally, maintaining consistency and ensuring the scalability of token storage in distributed systems further complicates the architecture.

To address these issues, organizations need a robust, distributed caching solution that can securely store and manage OAuth tokens across a cluster, ensuring rapid token validation, resilience against node failures, and scalability to handle increasing traffic loads.

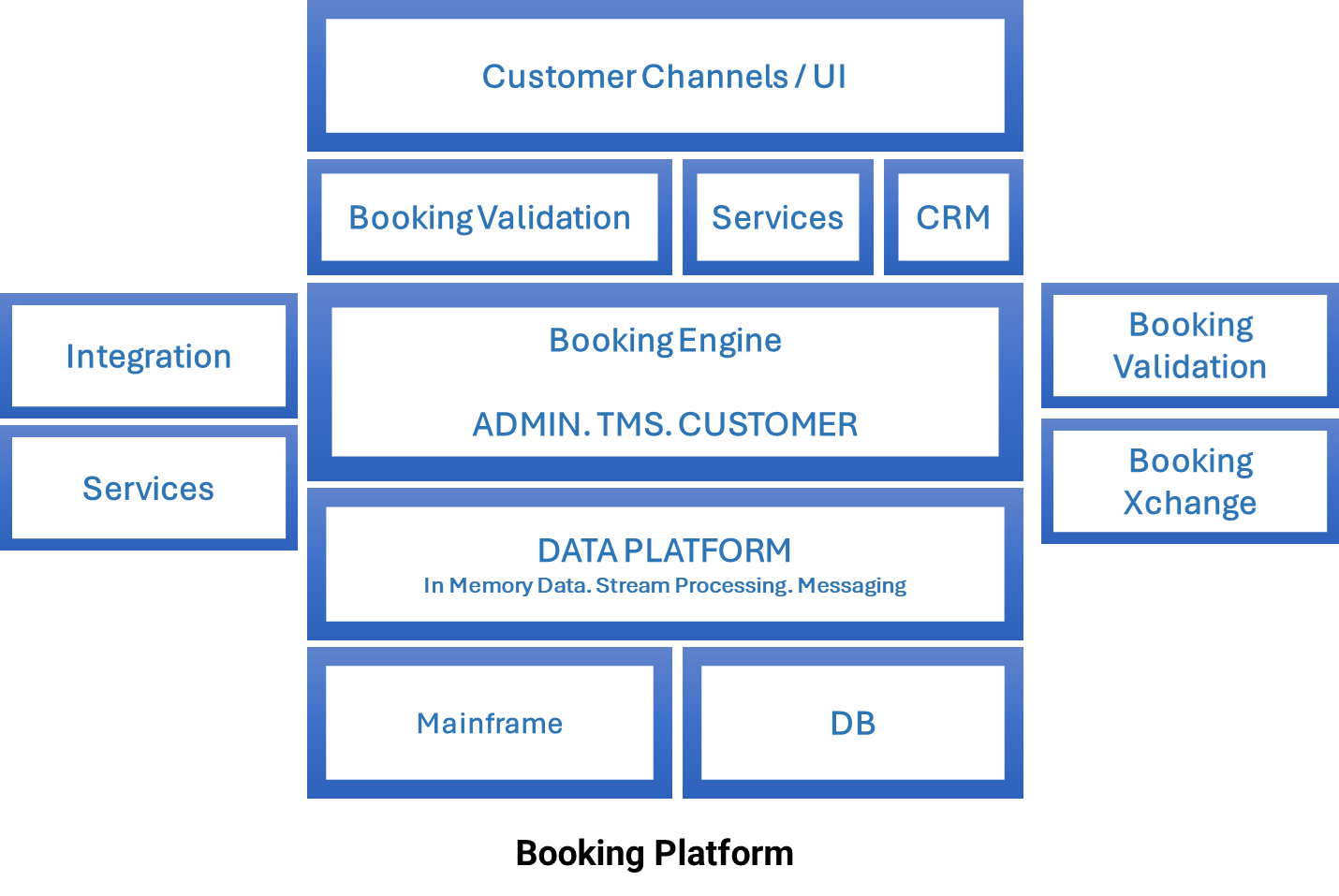

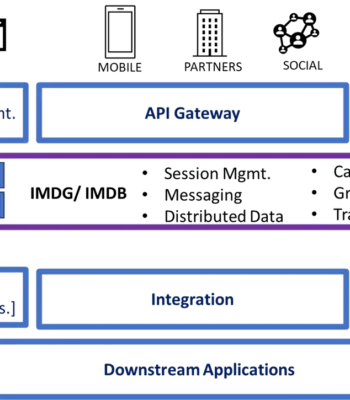

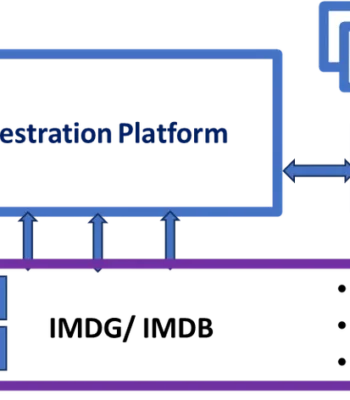

This case study explores how Mach41, a value-added platform built on open-source Hazelcast (a distributed in-memory data grid), can be used to cache OAuth tokens, providing a high-performance, highly available, and resilient solution for modern applications.

Solutions

To achieve this, the following key strategies are employed. Beyond meeting the core requirement, they provide several additional benefits.

1. Reduced Latency through Token Caching

With tokens cached on a Mach41 caching cluster, applications can validate access requests against the in-memory cache instead of calling the authorization server or database on every request, significantly reducing response times and offloading traffic from the backend.

Benefits

- High Performance: Tokens are stored and retrieved in memory, enabling sub-millisecond validation lookups.

- Resilience and Availability: Hazelcast’s distributed architecture keeps backup copies of each data partition on other cluster members, so cached tokens survive node failures and service continues uninterrupted.

- Scalability: The cluster can scale horizontally, allowing the system to handle increasing loads without performance degradation.

- Expiration Policies: Built-in support for TTL (Time-to-Live) ensures tokens are automatically evicted after their validity period, reducing stale data and maintaining security.
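The validation flow and TTL policy above can be sketched as a cache-aside lookup. This is a minimal illustration under stated assumptions, not Mach41's API: `TokenValidator`, `CachedToken`, and the `introspect` function (a stand-in for a call to the authorization server, for example an RFC 7662 introspection endpoint) are hypothetical names. The cache is typed as `java.util.concurrent.ConcurrentMap`, which Hazelcast's `IMap` implements, so a map obtained from `m41dpInstance.getMap("oauthTokens")` could be passed in; a `ConcurrentHashMap` is enough for local testing.

```java
import java.io.Serializable;
import java.util.Optional;
import java.util.concurrent.ConcurrentMap;
import java.util.function.Function;

// Hypothetical cache-aside validator; not part of the Mach41 or Hazelcast API.
class TokenValidator {

    /** Cached facts about an active token: its subject and absolute expiry (epoch seconds). */
    public record CachedToken(String subject, long expiresAtEpochSeconds) implements Serializable {}

    private final ConcurrentMap<String, CachedToken> cache;
    // Hypothetical fallback that asks the authorization server whether a token is active.
    private final Function<String, Optional<CachedToken>> introspect;

    TokenValidator(ConcurrentMap<String, CachedToken> cache,
                   Function<String, Optional<CachedToken>> introspect) {
        this.cache = cache;
        this.introspect = introspect;
    }

    /** Returns true if the token is active at nowEpochSeconds. */
    public boolean validate(String tokenId, long nowEpochSeconds) {
        CachedToken hit = cache.get(tokenId);
        if (hit != null) {
            if (hit.expiresAtEpochSeconds() > nowEpochSeconds) {
                return true;                  // cache hit: no round trip to the authorization server
            }
            cache.remove(tokenId, hit);       // expired entry that TTL eviction has not removed yet
            return false;
        }
        // Cache miss: ask the authorization server once, then cache only active tokens.
        Optional<CachedToken> fresh = introspect.apply(tokenId);
        if (fresh.isPresent() && fresh.get().expiresAtEpochSeconds() > nowEpochSeconds) {
            cache.put(tokenId, fresh.get());
            return true;
        }
        return false;
    }

    /** Remaining lifetime to use as the cache TTL; 0 means the token has already expired. */
    public static long ttlSeconds(long expiresAtEpochSeconds, long nowEpochSeconds) {
        return Math.max(0L, expiresAtEpochSeconds - nowEpochSeconds);
    }
}
```

Only active tokens are cached, so unknown or revoked tokens still reach the authorization server on every attempt. When the cache is an `IMap`, the `ttlSeconds` result can be passed to `put(key, value, ttl, TimeUnit.SECONDS)`; skip caching when it is 0, because Hazelcast interprets a TTL of 0 as no expiry.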

Implementation Example:

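IMap<String, String> tokenCache = m41dpInstance.getMap("oauthTokens");

// ttlSeconds is the token's remaining lifetime (e.g. its expires_in value), not an absolute
// timestamp; keep it above 0, because Hazelcast treats a TTL of 0 as "never expire"
tokenCache.put(accessTokenId, token, ttlSeconds, TimeUnit.SECONDS);

2. Higher Security through Distributed Rate Limiting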

Rate limiting, which caps the number of calls a client or source IP can make within a time window, provides stronger protection against DDoS and similar high-volume attacks. It also ensures that paying customers consume the platform within their allotted quotas.

Mach41, built on the Hazelcast in-memory data grid, provides a robust foundation for distributed rate limiting that is consistent, scalable, and performant.

Benefits

Key features of this solution include:

- Resilience: Built-in fault tolerance ensures that rate-limiting data is replicated across the cluster, maintaining functionality even during node failures.

- Global Consistency: Counters are held in a shared, cluster-wide map, so all application instances see the same view of usage.

- High Performance: Rate-limit checks and updates are performed in-memory, delivering low-latency operations suitable for high-traffic systems.

- Scalability: Hazelcast clusters scale horizontally, allowing the rate-limiting solution to handle increasing request volumes without degradation.

- Flexibility: Customizable rate-limiting policies, such as per-user, per-IP, or per-endpoint limits, can be easily implemented and enforced.

Implementation Example:

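IMap<String, Integer> rateLimiter = m41dpInstance.getMap("rateLimits");

// One counter per client per one-minute window, so the count resets when the window changes
String windowKey = clientId + ":" + (System.currentTimeMillis() / 60_000);

rateLimiter.lock(windowKey); // cluster-wide lock: read, check and increment happen atomically
try {
    int currentCount = rateLimiter.getOrDefault(windowKey, 0);
    if (currentCount >= MAX_REQUESTS_PER_MINUTE) {
        throw new RateLimitExceededException();
    }
    // The TTL only cleans up old windows; it no longer defines the window itself
    rateLimiter.put(windowKey, currentCount + 1, 1, TimeUnit.MINUTES);
} finally {
    rateLimiter.unlock(windowKey);
}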
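When many application instances update the same counter, reading it and writing it back as two separate operations can lose increments under concurrency. A minimal lock-free approach is a fixed-window counter updated with `ConcurrentMap.merge`, which performs the read-modify-write atomically per key. `FixedWindowRateLimiter` and the identity key format (such as `ip:203.0.113.7`) are hypothetical; an `IMap<String, Integer>` from `m41dpInstance.getMap("rateLimits")` could serve as the counter map, assuming the map is also configured with a time-to-live so that counters for past windows are evicted.

```java
import java.util.concurrent.ConcurrentMap;

// Hypothetical fixed-window rate limiter over a shared ConcurrentMap (e.g. a Hazelcast IMap).
class FixedWindowRateLimiter {

    private final ConcurrentMap<String, Integer> counters;
    private final int maxRequestsPerWindow;
    private final long windowMillis;

    FixedWindowRateLimiter(ConcurrentMap<String, Integer> counters,
                           int maxRequestsPerWindow, long windowMillis) {
        this.counters = counters;
        this.maxRequestsPerWindow = maxRequestsPerWindow;
        this.windowMillis = windowMillis;
    }

    /**
     * Counts one request for the identity (e.g. "ip:203.0.113.7" or "user:alice:/token")
     * and returns true if it is still within the limit for the current window.
     */
    public boolean tryAcquire(String identity, long nowMillis) {
        // Each window gets its own key, so the count starts from zero when the window changes.
        String windowKey = identity + "@" + (nowMillis / windowMillis);
        // merge() performs the read-increment-write atomically for this key.
        int count = counters.merge(windowKey, 1, Integer::sum);
        return count <= maxRequestsPerWindow;
    }
}
```

Rejected requests are still counted, which keeps a flooding client blocked until its window ends. Per-user, per-IP, or per-endpoint policies differ only in how the identity string is built. Fixed windows can admit up to twice the limit in a burst that straddles a window boundary; a sliding-window scheme avoids this at the cost of more state.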

Other Benefits

Beyond token caching and rate limiting, the platform brings several further benefits to an OAuth module.

A. Distributed Session Management

OAuth often integrates with sessions (e.g., for user login or SSO flows).

Implementation Summary

Use Hazelcast to store session data in a distributed manner.

- This eliminates reliance on a single server and ensures session failover.

- Store user session details (e.g., user roles, permissions) to reduce repeated database lookups.
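The session approach above can be sketched as a store keyed by session ID whose value carries the user's roles and permissions. `SessionStore` and `UserSession` are hypothetical names, and the map is typed as `ConcurrentMap` so that a Hazelcast `IMap` could back it in a cluster; because Hazelcast serializes map values, the record implements `Serializable`.

```java
import java.io.Serializable;
import java.util.Optional;
import java.util.Set;
import java.util.concurrent.ConcurrentMap;

// Hypothetical distributed session store; any instance sharing the map sees the same sessions.
class SessionStore {

    /** Hypothetical session payload: who the user is and what they may do. */
    public record UserSession(String userId, Set<String> roles, Set<String> permissions)
            implements Serializable {}

    private final ConcurrentMap<String, UserSession> sessions;

    SessionStore(ConcurrentMap<String, UserSession> sessions) {
        this.sessions = sessions;
    }

    public void save(String sessionId, UserSession session) {
        sessions.put(sessionId, session);
    }

    /** Resolves the session from the shared map; no database lookup is needed. */
    public Optional<UserSession> find(String sessionId) {
        return Optional.ofNullable(sessions.get(sessionId));
    }

    /** Permission check served entirely from the cached session. */
    public boolean hasPermission(String sessionId, String permission) {
        return find(sessionId).map(s -> s.permissions().contains(permission)).orElse(false);
    }

    public void logout(String sessionId) {
        sessions.remove(sessionId);
    }
}
```

Because every instance reads the same map, a request routed to a different node after a failover still finds the session.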

B. Near Cache for Frequently Accessed Data

Some OAuth data, such as client credentials or scopes, is frequently read but rarely changes.

Implementation Summary

Use Hazelcast Near Cache to store frequently accessed data close to the application instance for low-latency reads.

Configuration Example

hazelcast-client:
  near-cache:
    oauthClientCache:
      in-memory-format: OBJECT
      time-to-live-seconds: 3600
      eviction:
        eviction-policy: LRU
        max-size-policy: ENTRY_COUNT
        size: 1000

C. Session and Token Revocation Synchronization

Token revocation (e.g., for logout or client deactivation) needs to propagate immediately across the system.

Implementation Summary

Use a Mach41 DP topic or an event-driven architecture.

- Publish revocation events to a Hazelcast topic.

- All application instances listening to the topic will invalidate the respective tokens or sessions.
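The receiving side described in these bullets can be sketched as a per-instance handler that drops a revoked token from the instance's local cache and remembers the revocation, so validation code can check `isRevoked` before trusting a cached or freshly fetched token. `RevocationHandler` and its local cache are hypothetical, and the sketch uses only the JDK.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.function.Consumer;

// Hypothetical per-instance handler for "token revoked" events.
class RevocationHandler implements Consumer<String> {

    private final ConcurrentMap<String, String> localTokenCache;   // this instance's in-process cache
    private final Set<String> revoked = ConcurrentHashMap.newKeySet();

    RevocationHandler(ConcurrentMap<String, String> localTokenCache) {
        this.localTokenCache = localTokenCache;
    }

    /** Called once per revocation event with the revoked token ID. */
    @Override
    public void accept(String tokenId) {
        revoked.add(tokenId);             // remember it so a late cache refill cannot resurrect it
        localTokenCache.remove(tokenId);  // drop any cached copy immediately
    }

    public boolean isRevoked(String tokenId) {
        return revoked.contains(tokenId);
    }
}
```

With Hazelcast, each instance could register the handler via `revocationTopic.addMessageListener(message -> handler.accept(message.getMessageObject()))`. The revoked set grows with every event; entries can be pruned once the corresponding token would have expired anyway.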

Implementation Example

ITopic<String> revocationTopic = m41dpInstance.getTopic("tokenRevocation");

revocationTopic.publish(tokenId);

D. Optimized Database Writes and Reads

Use Hazelcast as a write-behind cache for token persistence. This reduces direct load on the database.

Implementation Summary

Batch database writes using the Mach41 DP batching feature to improve performance.
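Hazelcast implements write-behind through a `MapStore` configured with `write-delay-seconds` greater than 0, while `write-batch-size` and `write-coalescing` control batching and deduplication; it is an assumption here that the Mach41 DP batching feature maps onto these settings. The in-process sketch below (a hypothetical `WriteBehindBuffer`, JDK only) illustrates what those settings do: repeated writes to one key collapse into the latest value, and a periodic flush sends pending entries to the database in fixed-size batches.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Hypothetical illustration of write-behind with coalescing and batching.
class WriteBehindBuffer {

    private final Map<String, String> pending = new LinkedHashMap<>(); // latest value per key
    private final int batchSize;
    private final Consumer<Map<String, String>> batchWriter;          // e.g. one JDBC batch per call

    WriteBehindBuffer(int batchSize, Consumer<Map<String, String>> batchWriter) {
        this.batchSize = batchSize;
        this.batchWriter = batchWriter;
    }

    /** Records a write; repeated writes to the same key coalesce into one. */
    public synchronized void write(String key, String value) {
        pending.put(key, value);
    }

    /** Intended to run periodically; drains pending writes in batches and returns the batch count. */
    public synchronized int flush() {
        int batches = 0;
        List<String> keys = new ArrayList<>(pending.keySet());
        for (int i = 0; i < keys.size(); i += batchSize) {
            Map<String, String> batch = new LinkedHashMap<>();
            for (String k : keys.subList(i, Math.min(i + batchSize, keys.size()))) {
                batch.put(k, pending.get(k));
            }
            batchWriter.accept(batch);
            batches++;
        }
        pending.clear();
        return batches;
    }
}
```

This buffer is not durable: anything pending when the process stops is lost, so the flush interval should stay short.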

E. Scaling OAuth Using Mach41 Clusters

High traffic in enterprise platforms demands horizontal scaling.

Implementation Summary

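Add cluster members as traffic grows. Hazelcast spreads data partitions and their backups across members and rebalances them automatically when members join or leave, so every node in the OAuth service cluster works against the same shared token, session, and rate-limit data.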

Conclusion

As distributed applications grow in complexity and scale, ensuring secure and efficient access control and traffic management becomes critical. This case study explores the use of Hazelcast, a distributed in-memory data grid, to address two key challenges: caching OAuth tokens for reduced latency and implementing distributed rate limiting for consistent traffic control.

By leveraging Hazelcast’s in-memory architecture, organizations can reduce the overhead of token validation by caching OAuth tokens across the cluster, enabling sub-millisecond response times and improving system efficiency. Additionally, Hazelcast provides a robust foundation for distributed rate limiting, ensuring consistent enforcement of traffic quotas, global scalability, and resilience against node failures.

Key outcomes of this solution include:

- High Performance: Token validation and rate-limiting operations are performed in-memory for minimal latency.

- Scalability: Hazelcast’s distributed nature supports seamless scaling to handle increasing traffic loads.

- Resilience and Availability: Built-in fault tolerance ensures uninterrupted operations even during failures.

- Enhanced Security: Features like TTL for token caching and customizable rate-limiting policies align with best practices in secure access control.

This case study demonstrates how Hazelcast can empower organizations to build high-performing, scalable, and secure systems, ensuring an optimal user experience while managing the complexities of modern distributed applications.